SEO brand visibility in LLMs

LLM brand visibility: SEO strategies

With large language models (LLMs) receiving almost three billion visits a month, SEOs cannot ignore the potential for audience growth that tools like ChatGPT, Gemini, and other LLMs bring to the table. LLMs open up a vast world of opportunities for brands to reach new audiences and tap into extensive sources of information and creativity.

However, how do you go from optimising web pages for ranking in a list-style SERP to showing up in LLMs for relevant questions? LLMs can also offer a fresh perspective on content creation and brand engagement, helping you discover innovative ways to connect with your audience.

In this article, I’ll explore methods and tactics that can help you improve your brand’s visibility in this new channel, leveraging the imaginative capabilities of LLMs to generate creative content and visuals.

Introduction to LLMs

Large language models (LLMs) are advanced AI systems designed to process, understand, and generate human language at scale.

Trained on massive datasets, these language models can answer questions, generate text, and even create images, making them a powerful force in today’s digital landscape. Tools like Microsoft Copilot are at the forefront of this revolution, offering users the ability to interact with technology in more natural and intuitive ways.

As LLMs become increasingly integrated into search engines such as Google Search, the way users discover information and engage with online content is evolving rapidly. For SEO specialists, this shift means rethinking strategies to align with new search intent patterns and optimising for AI-powered search results.

By understanding how large language models interpret and generate content, brands can better position themselves to capture attention in this new era of search, where the ability to generate text and create images is just the beginning of what these AI-powered tools can achieve.

What is LLM optimisation?

LLM optimisation, sometimes referred to as ‘generative engine optimization’, is essentially the act of taking steps to increase references to your brand or website in LLM responses.

For this process, you’ll prioritise LLM platforms (like ChatGPT, Gemini, Copilot, and other LLMs) over traditional search engines when looking to improve audience growth, share of voice, and website traffic. This also means focusing on creating optimised content that is more likely to be referenced by LLMs.

Is LLM optimisation the same as AI overview optimisation?

No, it’s not.

In fact, before we get started, I should clarify that I’m not covering brand visibility in AI overviews in this article. That might seem counterintuitive because AI overviews are a hot topic. Although they affect how searchers click and what is visible online, there’s a fundamental difference between AI overviews and LLMs when it comes to the user journey.

People use LLMs for search and information discovery.

They’re not ‘search engines’ in the traditional sense, but people still use LLMs for this purpose. In contrast, AI overviews just appear in search results, like a featured snippet or a People Also Ask (PAA) box. AI overviews are designed to provide summarised answers quickly, whereas LLMs allow for deeper, interactive exploration.

Since users never opt in, give consent, or even know for certain that they will see an AI overview, the user journey is completely different. With LLMs, users give feedback, ask follow-up questions, and go deeper—this is the experience that I will explore in this article.

| LLMs for search: user journey | AI overviews in Google Search: user journey |
|---|---|
| Users choose LLMs for search | Users happen upon AI overviews (i.e., overviews do not always trigger for every query) |
| Users interact with LLM responses | Users receive an AI overview of content |
| Users control which LLM content they wish to see | AI overviews are generated according to system needs |

Why brands should consider LLM optimisation

This essentially comes down to traffic: As of June 2025, ChatGPT brought in around 5.19 billion visits per month, 9 billion monthly page views, and users spent around 6 minutes per session, according to SimilarWeb. That’s a lot of time that a lot of users aren’t spending on traditional search engines.

Traditionally, brands have focused on optimising for organic search to improve visibility and attract traffic through search engines. Still, the rise of LLMs is changing the landscape of how users discover content.

And ChatGPT isn’t the only one taking this traffic.

How much organic traffic do LLMs get?

Across the web, there’s a whole host of LLM-based chatbot channels that occupy users’ time. Data from SimilarWeb (August 2024) showed that there were almost three billion combined visits to these channels.

| LLM platform | Monthly visits (August 2024) |
|---|---|
| ChatGPT | 2.63B |
| Gemini | 267M |
| Claude | 70M |
| Perplexity | 60M |
| Copilot | 31.36M |

These platforms process billions of search queries each month, reflecting the scale at which users interact with AI-driven search and information retrieval.

ChatGPT leads the charge in terms of market share, but there’s a big mix of players that continue to grow their user base.
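To put that lead in numbers, here is a minimal Python sketch that turns the August 2024 figures quoted above into percentage shares. The visit counts are copied from the table; the function and variable names are my own and purely illustrative.

```python
# Monthly visits (August 2024) as quoted in the table above, in millions.
visits_millions = {
    "ChatGPT": 2630.0,
    "Gemini": 267.0,
    "Claude": 70.0,
    "Perplexity": 60.0,
    "Copilot": 31.36,
}


def visit_shares(visits):
    """Return each platform's share of the combined visits, as a percentage."""
    total = sum(visits.values())
    return {name: round(100 * count / total, 1) for name, count in visits.items()}


total_millions = sum(visits_millions.values())
shares = visit_shares(visits_millions)

# Print the platforms from largest to smallest share.
for name, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {share}%")
```

On these figures, ChatGPT accounts for about 86% of the combined visits, which is why it tends to be the first platform brands look at.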

Are LLMs taking users from Google?

Despite heated speculation, the emergence of these channels has yet to significantly impact Google's market share (at the time of publication), but analysts anticipate that this could change soon.

Gartner predicts a 25% drop in search engine use by 2026, and the same study predicts that organic traffic will also drop by 50% by 2028. If this happens, there will be a colossal change in the way that users acquire information, resulting in a significant impact on SEO as a discipline and marketing channel.

So, how do you optimise for generative search in LLMs?

Understand the differences between different LLM ‘search’ channels

Coordinate strategies for your most relevant LLM ‘search’ channels

Implement optimisations that support visibility across all LLM ‘search’ channels

Monitor your progress

Understand the different types of large language models

Essentially, LLM search channels fall into two primary categories:

LLMs with static pre-trained model responses

LLMs with search-augmented information retrieval

Each category is powered by a different type of language model, which determines how information is processed and delivered.

Static pre-trained LLMs: Claude, ChatGPT (Free), Gemini*, NotebookLM, and the Copilot app have a fixed training data set, and links may not be included.

Search-augmented LLMs: Perplexity, Copilot (MS365), and ChatGPT (Paid) have a fixed training set augmented with live data from search engines. They include links and updates based on web crawling.

*Gemini is unique in that it functions like a static pre-trained data LLM. However, (at the time of publication) it is essentially in beta mode, so it will occasionally surface links, but does so very inconsistently.

As a search marketer who’s looking to increase your visibility in one of these LLM tools, the differences here are very significant. They will have an impact on your expected visibility and campaign results.

Optimisations for static data pre-trained response LLMs

This category of LLMs retrieves information based almost exclusively on pre-trained model responses.

When you optimise for brand visibility in these LLMs:

Expect your visibility to change as training data updates

Don’t expect to see links consistently

Adapt your approach for each model

Give feedback on response accuracy

Create well-rounded content to increase the chances of being referenced in static LLMs

Monitor training data updates for visibility changes

Tools like Claude, the Copilot app assistant, ChatGPT’s free models, and (mostly) Gemini are LLMs that predominantly generate answers based on pre-trained data sets without access to live web data.

This means that when users ask them questions, they reply based on what is currently accessible in their bank of knowledge (as opposed to searching the internet for new information).

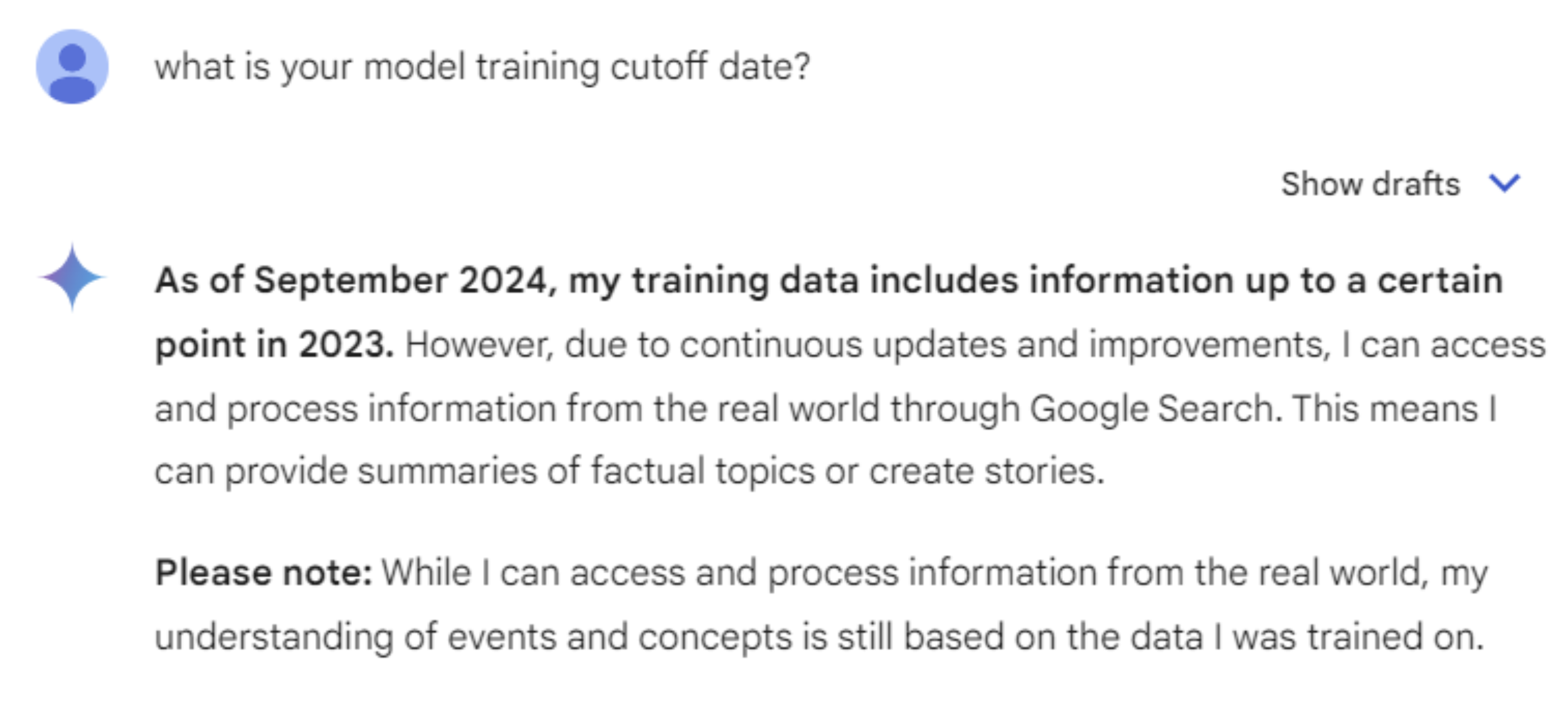

One way to visualise this is to think about asking a question to someone whose source of knowledge is limited to the latest version of an encyclopedia (i.e., they will know what happened up to the date the encyclopedia was published, but will need to wait until the next edition for newly discovered facts). The date of the last update to the training data is known as the ‘knowledge cutoff date’; the model has no knowledge of anything published between that date and the present day.

Example of an LLM knowledge cutoff period.

Each tool manages its information and datasets differently. How each tool manages its training set impacts the information it references and serves to users, thus also impacting how visible brands and information are within its platforms. For instance, at the time of writing, the training knowledge cutoff date for Claude’s premium model is April 2024 (other tools have different knowledge cutoff dates).

LLM providers regularly announce when they update their training sets (in the same manner as product releases).

As mentioned earlier, each LLM manages its training data differently.

ChatGPT, for instance, uses a tiered system for data freshness, with those paying for GPT-4 Turbo seeing data as fresh as December 2023 and those using the free version of GPT-4 seeing information up to September 2021. Additionally, users sometimes get access to previews of new features and models, which can change what data they access. Gemini is the most complex, though, because Google shares very little information about its workings.

However, for all these LLMs, the knowledge cutoff date for the training set determines whether certain content is eligible for inclusion in the LLM’s responses, making it worthwhile to check and monitor.
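A quick way to sanity-check eligibility is to compare a page's publication date against each model's cutoff. The sketch below is illustrative only: the cutoff dates are the ones quoted in this article, treated as end-of-month (an assumption on my part), and the function name is hypothetical. Always confirm current dates in each provider's documentation.

```python
from datetime import date

# Cutoff dates as quoted in this article, assumed to fall at month end.
# These change with every model release, so treat them as placeholders.
KNOWLEDGE_CUTOFFS = {
    "Claude (premium)": date(2024, 4, 30),
    "GPT-4 Turbo": date(2023, 12, 31),
    "GPT-4 (free)": date(2021, 9, 30),
}


def eligible_models(published, cutoffs=KNOWLEDGE_CUTOFFS):
    """Return the models whose training data could include content
    published on the given date (i.e., on or before the cutoff)."""
    return sorted(name for name, cutoff in cutoffs.items() if published <= cutoff)


# A page published in June 2022 predates two of the three cutoffs.
print(eligible_models(date(2022, 6, 1)))
```

Being published before a cutoff only makes content eligible. It does not mean the content was actually included in the training set.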

Furthermore, if you use visibility tools connected to ChatGPT, check the documentation to confirm the version of the model the tool uses before making adjustments to your strategy or approach. Agencies like Blu Mint can also leverage these visibility tools to monitor LLM visibility for their client projects, ensuring that client content is included and up-to-date in relevant LLM responses.

Optimise for brand mentions (not links)

It’s essential to note that these LLMs rarely, if ever, show links.

This means that your goal for these LLMs should be to be frequently and accurately mentioned for relevant queries (rather than receiving clicks).

When links are shown (as is sometimes the case in Google Gemini), you are unlikely to get links to your latest content, but rather to content that forms part of the most recent training data.

To track your progress toward increasing brand mentions, conduct regular queries for relevant entities and brand terms. You can do this by querying these models in bulk. For example, if you are promoting an AI tool like a portfolio copilot, frequent and accurate mentions in LLM responses can significantly boost its visibility and adoption.

Agencies like Blu Mint have started offering LLM visibility monitoring as a service.

To track your LLM brand visibility, use tools like GPT for Sheets or Ahrefs.

Services like SpyGPT by RiverFlowAI can reveal how often your brand appears in its collection of 250 million ChatGPT queries.
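If you collect responses yourself, the counting step is simple. Below is a minimal sketch, assuming you have already saved the text of each LLM response (how you collect them depends on the tool or API you use, which is not shown here). The brand name "Acme" and the sample responses are made up for illustration.

```python
import re


def mention_rate(brand, responses):
    """Share of responses that mention the brand at least once.

    Matching is case-insensitive and uses word boundaries, so "Acme"
    matches "acme's" but not "Acmeware". This assumes the brand name
    starts and ends with a letter or digit.
    """
    if not responses:
        return 0.0
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    hits = sum(1 for text in responses if pattern.search(text))
    return hits / len(responses)


# Hypothetical saved responses to the same set of brand-relevant queries.
sample_responses = [
    "Popular options include Acme and Globex.",
    "Globex is a common choice for small teams.",
    "Many reviewers recommend acme's starter plan.",
    "Acmeware is an unrelated product.",
]

rate = mention_rate("Acme", sample_responses)
print(f"Mentioned in {rate:.0%} of responses")
```

Running the same queries at regular intervals and logging the rate gives you a simple trend line to share with stakeholders.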

For SEO experts accustomed to traffic as a core KPI, this may seem counterintuitive. But mentions are an important metric for LLMs and may contribute to your overall brand value and even conversions. To prove this, you may want to include ‘ChatGPT’ as an option in customer-facing ‘how did you hear about us’ surveys.

Adapt your approach for each model

Remember that each LLM will have a distinct set of training data, a different update rate, and a unique user journey. Take this into account when speaking with stakeholders and managing your campaigns. If you are unsure of which model you are using, in many cases, you can ask the LLM chatbot directly. Some models may be better at referencing specific functionalities, such as Excel features, depending on the training data they receive.

Familiarise yourself with the release notes and documentation for the LLMs most relevant to your brand, and follow their updates to stay informed about changes to the platforms.

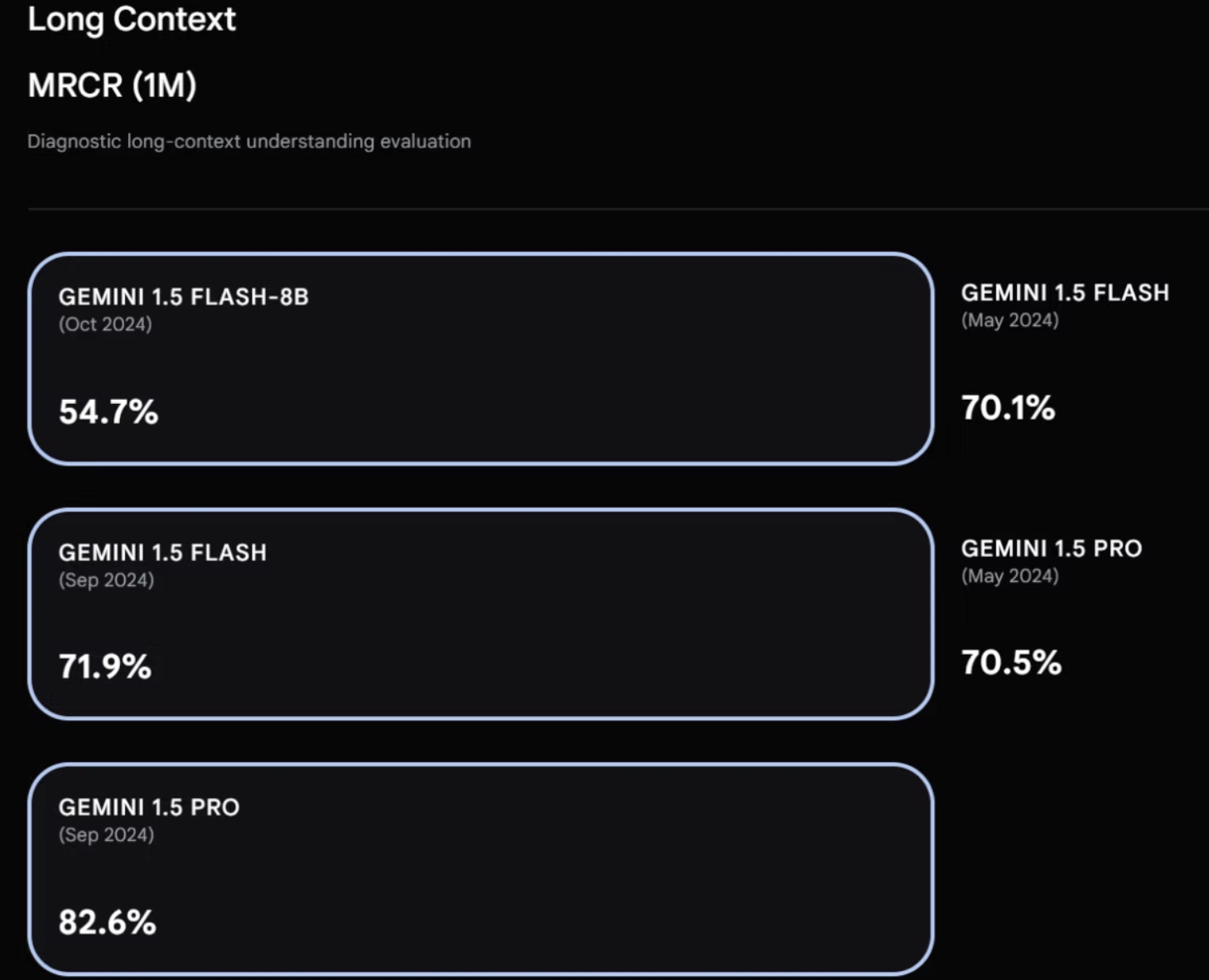

For instance, upon review of Google Gemini’s model documentation, a highly technical brand and product that relies on long, complex, highly contextualised explainers may choose to optimise their content to be more accessible to Gemini users.

This is because the Gemini model most frequently used by consumers, Gemini 1.5 Flash-8B, has a 54.7% accuracy rating for interpreting long context texts (as shown below). From a content strategy perspective, this could mean that shorter content may help with visibility in this model.

Major LLMs, such as Gemini, ChatGPT, Anthropic’s Claude, Perplexity, and Copilot, share data for their capabilities and knowledge sources. Understanding the differences between them can help you optimise your content's performance on each channel.

Provide feedback for accuracy

Even when an LLM isn't receiving fresh data, it still learns from its users, so feedback on responses is incredibly important.

Whenever you encounter an incorrect response about your brand, it is essential to provide feedback with the correct information. Ideally, this should be verifiable from the existing knowledge sources of the LLM. In its documentation, Google explains that feedback can “help make Gemini better” because “One important part of developing responsibly is expanding participation . . . . You can rate responses as good or bad and send feedback each time Gemini responds.”

In my experience, this approach yields results.

I was working on a website called MoneyHub, which was completely new and launched in summer 2023. In the summer of 2024, I asked Gemini [What is MoneyHub], and it listed other entities, but not the consumer comparison website I co-founded.

In March 2025, I asked the same question and found that the model was now aware of the website. When I asked for the URL of the site, Gemini listed a competitor's website. In response, I clicked the thumbs down button and provided the correct URL. When I asked the same sequence of questions again later, I found that Gemini returned the correct URL for the site.

Like any machine learning algorithm, LLMs require feedback to improve. So, updating them with better information about your brand is beneficial for both you and the tools.

Optimisations for search-augmented LLMs

Search-augmented LLMs have all of the elements of static pre-trained models, but in addition, search results also form part of their corpus of content. This means that these LLMs can surface fresher data in a short amount of time. With regard to visibility, the methods you might use to optimise for search-augmented LLMs will be more similar to those used for ranking in a traditional search engine.

Using an SEO tool can help identify and optimise the content that is most likely to be surfaced by search-augmented LLMs, streamlining your SEO tasks and improving your chances of ranking.

When optimising for search-augmented LLMs:

Optimise for the relevant search engine

Check your core queries regularly

Prioritise pages that show in the LLM when internal linking

Optimise for the relevant search engines

For search-augmented LLMs, like Perplexity, Copilot 365, or ChatGPT Premium, your optimisation strategy needs to take into account both the LLM and the search engine behind it. Use Google Search Console to monitor your site's performance and visibility in search-augmented LLMs, track keyword rankings, and analyse impressions and clicks.

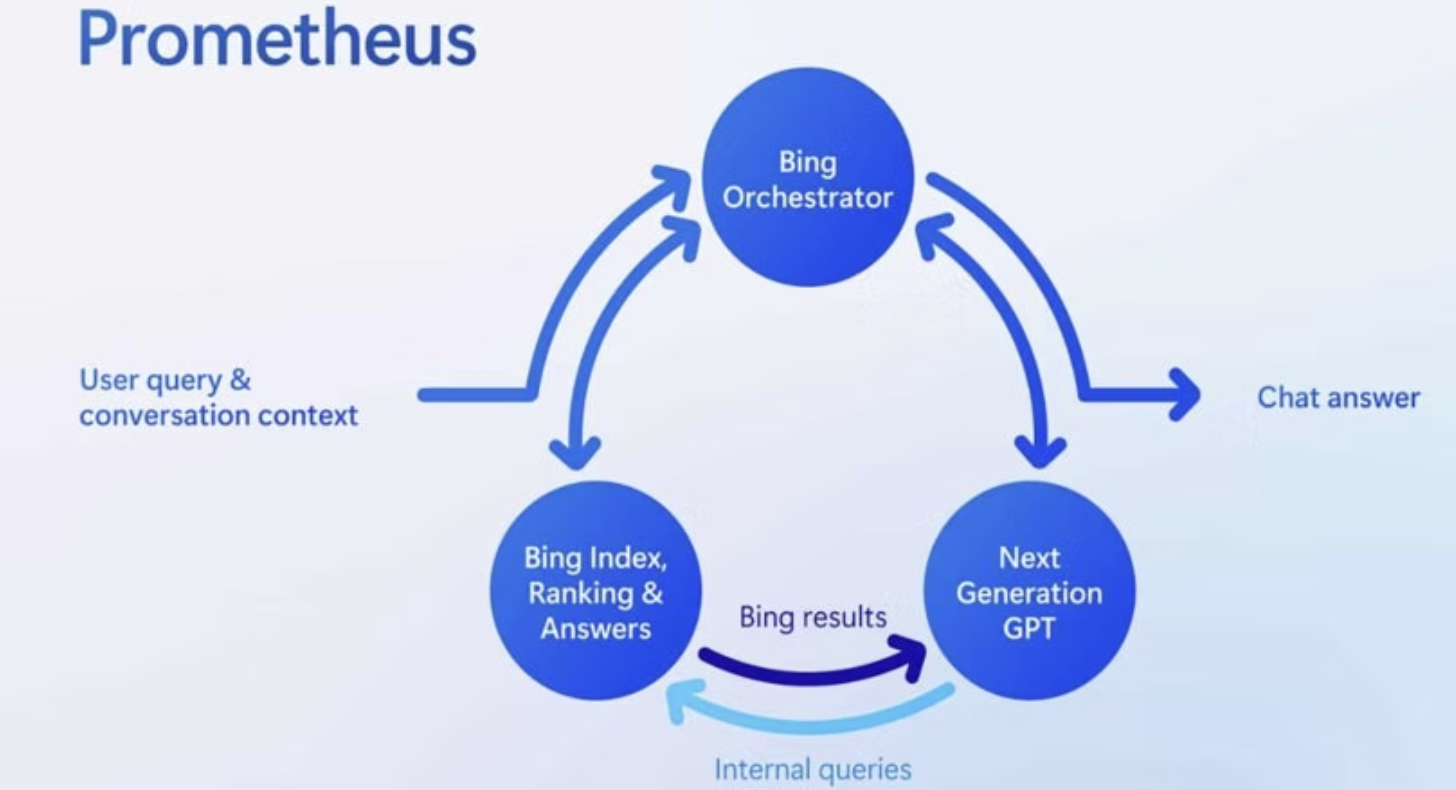

When Bing launched the first iteration of its search-augmented LLM, Copilot (then called ‘Bing Chat’), the company explained that it developed the Microsoft Prometheus model to ‘ground’ ChatGPT and reduce inaccuracies with information from Bing’s search results.

As illustrated above, this means that the interplay between the search engine and the LLM is dynamic and significant to the final output of the LLM. Further, this is specific to the search engine in question. That is to say: if you are looking to rank on Copilot, then it will be your Bing rankings (rather than your Google rankings) that will influence this.

Check your queries regularly in Google Search Console

Just as search engine rankings fluctuate, your visibility in an LLM that is dependent upon search engines is also subject to change. Spend some time auditing the results for your most important queries to see where your brand appears.

Remember to check again at regular intervals. This will help you to identify progress. LLMs can also assist with other tasks, such as content creation or data analysis, which can impact your brand's visibility.

Prioritise pages that are showing in LLMs for internal links

Search-augmented LLMs will very often show links as references for their results. When you see your brand’s domain in these links, this tells you that the LLM understands and knows this content, and that it now forms part of its corpus of information. This is akin to being indexed in traditional search.

This also means that your content that the LLM already cites is a great place to start when planning your internal linking for even greater LLM visibility. For example, an online store can improve its LLM visibility by prioritising internal links from product pages that are already referenced by LLMs. To get more pages referenced in a search-augmented LLM, you should create links from the pages that are already referenced.
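One way to turn this into a to-do list is to compare the pages an LLM already cites with the pages you want it to discover. The sketch below is a rough illustration under simple assumptions: you have a list of cited URLs (gathered manually or from a tool), a list of target URLs, and a map of your internal links (for example, from a site crawl). All paths and names are hypothetical.

```python
def linking_opportunities(cited_pages, target_pages, internal_links):
    """For each target page not yet cited, list the cited pages that
    do not link to it yet.

    internal_links maps a source URL to the set of URLs it links to.
    """
    opportunities = {}
    for target in target_pages:
        if target in cited_pages:
            continue  # already referenced by the LLM
        missing = sorted(
            source
            for source in cited_pages
            if target not in internal_links.get(source, set())
        )
        if missing:
            opportunities[target] = missing
    return opportunities


# Hypothetical online store data.
cited = {"/products/doll-house", "/guides/fashion-dolls"}
targets = ["/products/doll-car", "/guides/fashion-dolls", "/products/doll-wardrobe"]
links = {
    "/products/doll-house": {"/products/doll-car"},
    "/guides/fashion-dolls": {"/products/doll-car", "/products/doll-wardrobe"},
}

todo = linking_opportunities(cited, targets, links)
print(todo)
```

Here the only gap is a missing link from the cited doll house page to the wardrobe page, so that is where a new internal link would go first.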

Optimisations for all LLMs

Now that you’re familiar with the differences between types of LLMs, let’s look at the optimisations you can apply to all LLMs. Some LLMs are integrated into fully featured desktop apps, which may require different optimisation approaches compared to web-only platforms.

Manage the crawl

Prioritise search-augmented LLMs first

Manage your brand entities

Get involved with LLM platforms

Optimise for the crawl

LLMs use crawlers in the same way that traditional search engines do. That means you can block or instruct them via your robots.txt file. You can track bot log reports, and SEOs can use additional methods to guide the crawl for desired results. Users can also download Microsoft Copilot to take advantage of its integrated crawling and AI capabilities.

Many LLMs make their user agent names public.

| LLM | User Agent(s) |
|---|---|
| ChatGPT | OAI-SearchBot, ChatGPT-User, GPTBot |
| Copilot | BingBot |
| Gemini | Google-Extended* |
| Claude | ClaudeBot |
| Perplexity | PerplexityBot |

*Google does not explicitly state that Gemini uses Google-Extended; however, this is the most likely user agent (based on observation).
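Before relying on your robots.txt rules, it can help to test how they apply to each LLM crawler. The sketch below uses Python's standard-library `urllib.robotparser`. The robots.txt content, domain, and paths are hypothetical examples, and the user agent names come from the table above. Python's parser is a simplified matcher, so treat the output as a rough check rather than a guarantee of how each crawler behaves.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep two LLM crawlers out of low-value sections.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /search/
Disallow: /cart/

User-agent: PerplexityBot
Disallow: /search/

User-agent: *
Allow: /
"""

LLM_USER_AGENTS = [
    "GPTBot", "OAI-SearchBot", "ChatGPT-User", "bingbot",
    "Google-Extended", "ClaudeBot", "PerplexityBot",
]


def crawl_report(robots_txt, user_agents, urls):
    """Return {user_agent: {url: allowed}} for the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        agent: {url: parser.can_fetch(agent, url) for url in urls}
        for agent in user_agents
    }


URLS = [
    "https://www.example.com/products/widget",
    "https://www.example.com/search/widgets",
]

report = crawl_report(ROBOTS_TXT, LLM_USER_AGENTS, URLS)
for agent, results in report.items():
    print(agent, results)
```

In this example, GPTBot and PerplexityBot are kept out of internal search pages, while every listed crawler can still reach product content.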

When optimising for an LLM’s web crawler, take into account the purpose the LLM crawler serves.

For these tools, the aim is to use web content to improve the chat on the LLM. So, Prometheus uses Bing search to ‘ground’ ChatGPT before producing an answer in Copilot, and ChatGPT uses search results to “get you to a better answer: Ask a question in a more natural, conversational way, and ChatGPT can choose to respond with information from the web.”

The difference here (compared to classic search engines) is that the crawler is essentially a tool to help the LLM perform better. So the purpose of the crawl bot is to interpret and understand your content rather than to rank it.

From a crawling perspective, this means that crawlers do not necessarily need to see navigational pages or pagination pages. Instead, you should manage the crawl to prioritise content with information about your brand, products, and/or services.
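To see which pages LLM crawlers actually request, you can count their hits in your server logs. The following is a minimal sketch, assuming access logs in the common combined format. The log lines are invented, and matching on user agent text alone does not verify that a request really came from the named bot, since user agent strings can be spoofed.

```python
import re
from collections import Counter

# User agent tokens to look for (matched case-insensitively as substrings).
LLM_BOT_TOKENS = (
    "GPTBot", "OAI-SearchBot", "ChatGPT-User",
    "ClaudeBot", "PerplexityBot", "bingbot",
)

# Pulls the requested path out of a quoted request such as "GET /page HTTP/1.1".
REQUEST_PATH = re.compile(r'"(?:GET|HEAD|POST) (\S+)')


def llm_bot_hits(log_lines):
    """Count requests per (bot token, path) for known LLM crawlers."""
    hits = Counter()
    for line in log_lines:
        match = REQUEST_PATH.search(line)
        if not match:
            continue
        lowered = line.lower()
        for token in LLM_BOT_TOKENS:
            if token.lower() in lowered:
                hits[(token, match.group(1))] += 1
                break
    return hits


# Hypothetical log lines for illustration.
sample_log = [
    '203.0.113.5 - - [10/Mar/2025:10:00:00 +0000] "GET /products/widget HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.5 - - [10/Mar/2025:10:05:00 +0000] "GET /products/widget HTTP/1.1" 200 512 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.7 - - [10/Mar/2025:10:06:00 +0000] "GET /about HTTP/1.1" 200 300 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    '192.0.2.9 - - [10/Mar/2025:10:07:00 +0000] "GET /products/widget HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

hits = llm_bot_hits(sample_log)
for (bot, path), count in hits.most_common():
    print(bot, path, count)
```

If most bot hits land on navigational or paginated URLs rather than brand, product, or service pages, that is a signal to adjust your crawl rules.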

Prioritise search-augmented LLMs first

This advice is a case of timing: Search-augmented LLMs receive fresh data all the time. If you make changes to your website and want to know if they're reflected in your LLM visibility, you won’t get real-time feedback from a static LLM. You will have to wait until the next update.

If you take steps to make your content visible in a search-augmented LLM, on the other hand, then you can make improvements and see your progress in real-time. Copilot features, such as AI-powered content creation, image generation, and workflow optimisation, support real-time feedback and optimisation, helping users track changes and enhance productivity instantly. And since many model groups, like ChatGPT and Copilot, feature both search-augmented and static systems, the information that the search-augmented systems receive is highly likely to filter down to the static models within the group over time.

Manage your brand entities

Both search-augmented LLMs and static LLMs are trained on Wikipedia’s collection of over 65 million web pages. This information forms an essential part of Google’s knowledge graph and the semantic web as a whole.

This means that entities form a core component of LLM training and that brands with robust entities will have high visibility within LLMs. For example, creative brands that produce artwork can benefit significantly from strong LLM visibility, as their unique outputs are more likely to be recognised and surfaced by AI-driven tools.

Case in point?

Let’s look at Barbie.

If you use an NLP tool to analyse the summary for the 2023 film Barbie, entities like “Mattel”, “dolls”, and “toy industry” emerge, even though the summary does not explicitly mention Barbie being a toy.

Why does this happen?

Because language processing models can identify Barbie as an entity (particularly when close to Ken), and multiple data points across the semantic web tell these tools that Mattel owns both those entities. Further, the opening line of the brand’s Wikipedia page is “Barbie is a fashion doll.”

This means that when you ask an LLM, like Claude, Perplexity, ChatGPT, Copilot, or Gemini, to “name a fashion doll”, the first thing they answer is Barbie.

Whilst your business may not have the colossal intellectual property of an iconic brand, working on your structured data, Wikipedia presence, and wider semantic footprint can go a long way to improve the accuracy and visibility of your products/services in LLMs.
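As a starting point for structured data, the sketch below builds schema.org `Organization` markup as JSON-LD. The brand name, URLs, and description are placeholders, and the helper function is hypothetical. Which properties matter most for any given LLM is not something providers document, so treat this as a baseline rather than a recipe.

```python
import json


def organization_jsonld(name, url, same_as, description=None):
    """Build schema.org Organization markup as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        # sameAs links the entity to its other profiles across the web.
        "sameAs": list(same_as),
    }
    if description:
        data["description"] = description
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = organization_jsonld(
    name="Example Brand",
    url="https://www.example.com/",
    same_as=[
        "https://en.wikipedia.org/wiki/Example",
        "https://www.linkedin.com/company/example",
    ],
    description="Example Brand makes fashion dolls and accessories.",
)

# Wrap the JSON-LD in the script tag that goes into the page's HTML.
script_tag = f'<script type="application/ld+json">\n{markup}\n</script>'
print(script_tag)
```

Keeping the name, description, and `sameAs` profiles consistent with your Wikipedia entry and other listings helps reinforce a single, unambiguous entity.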

Get involved with LLM platforms

Don’t just prioritise visibility within these LLMs—get involved with the platforms. In addition to being included in the answers of LLM chatbots, brands are:

Creating content partnerships with LLMs

Creating custom GPTs

Making Perplexity pages

Exploring voice chat features to enable users to interact with their brand through AI-powered conversations, enhancing engagement and streamlining communication

Partner with LLMs

At the start of 2024, OpenAI started courting major publishers to become content partners; soon, other LLMs followed suit. At time of writing, these partnerships include:

OpenAI deals with Hearst, Le Monde, Prisa, Vox Media, Condé Nast, The Atlantic, GEDI, News Corp, and Time

Perplexity Publisher Program deals with Time, Entrepreneur, The Texas Tribune, and Der Spiegel

Some of these partnerships involve tools where portfolio copilot combines image generation, content creation, and portfolio management into a unified platform, streamlining creative workflows for publishers.

For teams from major publishers, there is potential to align yourself directly with LLMs to increase your visibility. For others, prioritising link-building campaigns to appear in publications with direct partnerships is worth considering.

Create custom GPTs

Not only do custom GPTs allow you to get your brand in front of ChatGPT users, but the URLs also rank on Google.

Creating a custom GPT tool for your potential customers can be seen as a PR move, but also an opportunity to make a curated space for your brand in the ChatGPT ecosystem, and to drive traffic to your website from ChatGPT. For example, brands can develop custom GPTs such as an AI picture generator, which creates high-quality visuals and artwork from text prompts, to engage users and drive traffic. For instance, the Diagrams: Flowcharts & Mindmaps GPT has links built into its outputs that drive traffic directly to the brand’s website. This is an opportunity that should not be underestimated.

Similarly, Perplexity’s ‘Perplexity Pages’ act as curated brand experiences directly within the app.

Common LLM mistakes to avoid

While AI agents built on large language models offer powerful capabilities, it’s important to use them wisely to maximise their benefits.

One common mistake is relying too heavily on AI-generated content, which can result in a lack of originality and may negatively impact search engine rankings. Failing to optimise content for search intent can also lead to poor search results and reduced online visibility.

Additionally, neglecting essential SEO tools such as meta tags can limit the reach and effectiveness of your content. Over-reliance on AI agents for tasks that require human creativity and judgment—such as writing code or developing complex, nuanced content—can also be problematic.

To avoid these pitfalls, it’s crucial to combine the strengths of AI agents with human expertise, ensuring that content remains authentic, relevant, and aligned with your brand’s goals.

Always review AI-generated outputs for accuracy, and use SEO tools strategically to enhance your online presence and achieve better results in search engines.

LLM optimisation: blend tried-and-true tactics with new technology for brand success

Though the technology is dazzling, SEO professionals can bring tried and tested methods for boosting audience discovery into the process of LLM optimisation. From managing crawling to coordinating link-building campaigns and adopting new channels, there are gains to be made for proactive marketers.

AI agents like Microsoft Copilot, ChatGPT and Gemini are transforming the way individuals and businesses approach daily tasks and creative projects.

For businesses, AI agents streamline the creation of engaging content, support customer interactions, and open up new avenues for creativity.

Optimising for LLMs can also spark inspiration and help brands create stunning visuals through advanced image generation features. It's important to ensure brand visibility across platforms, both on-site and off-site.